The Greatest $1 Million Marketing Campaign in History

Slick, Subtle, & Sneaky

There is a saying that goes… extraordinary claims require extraordinary evidence.

This could be considered a controversial statement, since it implies that the bar for evidence is not uniform, which runs against the consistency of the scientific method. Nevertheless, claims that could fundamentally change our perception of reality may warrant especially intense scrutiny. A key component of scientific rigor is statistical power, which comes from a substantial number of data points. In scientific publishing, meta-analyses offer considerable statistical power because they combine results from many studies rather than relying on just one.

As we are all aware… scientists can be subject to biases, and it is much easier to publish fraudulent results in a single study than to fraudulently publish a meta-analysis that includes dozens, if not hundreds, of studies with thousands of data points.
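To make the point about statistical power concrete, here is a minimal sketch of Stouffer's method, one common way of pooling independent z-scores. It is an illustrative assumption: it is not necessarily the technique used in any of the meta-analyses discussed here, and the study values are invented. Sixteen studies that each fall short of significance on their own combine into a highly significant result, provided the studies are independent and unbiased.

```python
import math


def stouffer_z(z_scores, weights=None):
    """Combine independent per-study z-scores with Stouffer's method.

    Unweighted: Z = sum(z_i) / sqrt(k)
    Weighted:   Z = sum(w_i * z_i) / sqrt(sum(w_i ** 2))
    """
    if weights is None:
        weights = [1.0] * len(z_scores)
    if len(weights) != len(z_scores):
        raise ValueError("need exactly one weight per study")
    numerator = sum(w * z for w, z in zip(weights, z_scores))
    denominator = math.sqrt(sum(w * w for w in weights))
    return numerator / denominator


def one_sided_p(z):
    """One-sided p-value P(Z >= z) for a standard normal variable."""
    return 0.5 * math.erfc(z / math.sqrt(2))


# Hypothetical data: 16 small studies, each unimpressive on its own (z = 1.0, p ~ 0.16).
studies = [1.0] * 16
combined = stouffer_z(studies)
print(combined)               # 4.0
print(one_sided_p(combined))  # roughly 3.2e-05
```

The same arithmetic cuts both ways: pooling amplifies whatever is in the inputs, so a meta-analysis is only as good as the studies it includes, which is why reviewers also check for selective reporting (the "file drawer" problem).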

In the field of psychology, there are over 2,400 peer-reviewed journals. Within academic circles, a study’s credibility is not based solely on whether it passes the peer-review process. The reputation and impact factor of the specific journal are key factors in the attention and respect the paper receives. A journal’s “impact factor” is the average number of citations received in a given year by the articles it published during a preceding window (two years for the standard metric, five years for the 5-year version).
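As a rough illustration of the arithmetic, the sketch below computes an impact factor from counts. The journal, every number, and the function name are hypothetical; the published figures come from Clarivate's Journal Citation Reports, which apply their own rules about what counts as a citable item.

```python
def impact_factor(citations, citable_items):
    """Journal impact factor for a census year.

    citations: {publication_year: citations received in the census year by
                items published in that year}
    citable_items: {publication_year: number of citable items published that year}
    Both dicts must cover the same window: the 2 preceding years for the
    standard metric, the 5 preceding years for the 5-year metric.
    """
    if set(citations) != set(citable_items):
        raise ValueError("citations and item counts must cover the same years")
    total_items = sum(citable_items.values())
    if total_items == 0:
        raise ValueError("no citable items in the window")
    return sum(citations.values()) / total_items


# Hypothetical journal, census year 2023, standard 2-year window (2021-2022).
citations = {2021: 900, 2022: 700}
items = {2021: 100, 2022: 100}
print(impact_factor(citations, items))  # 8.0
```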

The journal American Psychologist has a 5-year impact factor of 12.8, which places it within the top 3% most prestigious journals in the field of psychology.

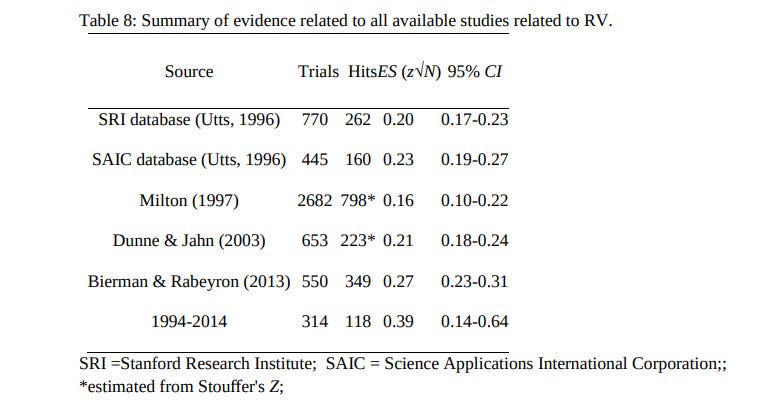

In 2018, Professor Etzel Cardeña of Lund University in Sweden published a meta-analysis in American Psychologist on the field of parapsychology (PSI). Dr. Cardeña’s analysis covered various PSI phenomena, including remote viewing, ganzfeld, distant healing, presentiment, PSI dream studies, precognition, remote influence, micro-PK, and the Global Consciousness Project. The paper drew on the results of thousands of trials published in hundreds of studies, including research at the Stanford Research Institute (SRI), Science Applications International Corporation (SAIC), Duke University, and Princeton University. The meta-analysis concluded: “The evidence provides cumulative support for the reality of psi, which cannot be readily explained away by the quality of the studies, fraud, selective reporting, experimental or analytical incompetence, or other frequent criticisms. The evidence for psi is comparable to that for established phenomena in psychology and other disciplines, although there is no consensual understanding of them.”

When it was first published, this meta-analysis was celebrated by many long-time researchers and supporters in the field of PSI, who believed this would be the paper that changes everything. In theory, this publication had every quality that a fair-minded critic would presumably concede as legitimate… a high-impact journal, a credentialed author, and a thorough meta-analysis spanning hundreds of studies.

Unfortunately, the paper failed to make the sort of impact that PSI insiders had been anticipating. This was rather predictable, since someone who is vehemently opposed to acknowledging a truth… any truth, for that matter… can simply refuse to look at the evidence, no matter how significant or comprehensive it is.

It is a sickness of the human psyche to operate in this manner.

The meta-analysis by Dr. Cardeña included thousands of trials in hundreds of studies conducted over decades at a cost of well over $30 million (Carey et al., 2007; Smith et al., 1995). Yet even with all these resources and reputable organizations behind the research, it failed to make a serious dent in the public perception of PSI, which persists to this day.

This is because public perception had already been shaped… not by scientific research disproving any data points, but by a magician named James Randi. Randi publicly offered a $1 million prize for nearly 20 years (1996-2015). The dominant narrative is that Randi scoured the earth far and wide for the most skilled “PSI practitioners”… yet somehow they all failed to display their abilities under his “rigorous” conditions. Going a step further, Randi also discredited any and all research in the field of PSI, further molding the narrative that none of the data generated by the entire field was worth looking into. A piece by Mitch Horowitz outlines some of the tactics Randi used to discredit the entire field.

Here is an excerpt from the piece:

Another case was Randi’s yearly “million-dollar challenge,” often held in Las Vegas, in which he tempted psychics with a cash prize. For years it was an annual charade to which virtually no serious observer or claimant would venture near. Journalist and NPR producer Stacy Horn, who wrote about Rhine’s lab at Duke University in her 2009 book Unbelievable, queried Randi in June 2008 about his million-dollar prize. She told me:

I had an exchange with Randi because I was going to have the following sentences about his million-dollar prize in my book:

“To date, Randi’s million-dollar prize has not been awarded, but according to Chris Carter, author of Parapsychology and the Skeptics, Randi backs off from any serious challenge. ‘I always have an out,’ he has been quoted as saying.”

I sent that to Randi to ask him if he really said that. …He wrote back saying that the quote was true, but incomplete. What he really said was, “I always have an ‘out’ — I’m right!”

It seemed like he thought he was being amusing, but I didn’t really know a lot about him yet. But it also seemed to indicate that the million-dollar prize might not really be a serious offer. So I asked him how a decision was made, was there a committee and who was on it? …He replied, “If someone claims they can fly by flapping their arms, the results don’t need any ‘decision.’ What ‘committee’? Why would a committee be required? I don’t understand the question.”

At that point I wrote him off and decided to not mention his prize in my book since it just seemed like a publicity stunt for Randi.

The most amazing thing about all this is that the $1M was never actually spent… it was only dangled. It is assumed that the prize money was legitimately held in a bank account thanks to the backing of UUNET founder Rick Adams. Nevertheless, this has to be the most effective “$1 million marketing campaign” in the history of the world, since its effects are still felt globally today. This prize was created not by a scientist… but by a magician who managed to influence the culture, including scientists themselves!

When people claim there is no evidence or proof of PSI… they usually point to the fact that the $1M prize was never claimed, regardless of the Cardeña paper and any ongoing or future studies in the field of the “supernormal.” While the $1M prize no longer exists (Randi discontinued it in 2015 and is now deceased), a $500k prize does exist and is similarly cited as evidence that PSI is non-existent.

It is an intriguing case study in marketing and sociological impact far beyond the monetary value involved. People who are normally “scientifically and data oriented” will place far more weight on a magician’s prize than on a scientifically generated meta-analysis.

$1 million can influence the entire perception of reality.

Think about that.

With the billions of dollars held by private wealthy citizens all across the world… a measly $1M (now $500k) controls the perception of the masses.

When we state “perception of the masses”… we are referring to the promotion of materialism. Any and all PSI or supernormal experiences are written off as merely psychiatric conditions in which individuals hallucinate.

Near-death experience? hallucination.

Telepathic experience? hallucination.

Alien encounter? hallucination.

Out-of-Body Experience? hallucination.

Thinking that you can transfer “energy” to another for healing? hallucination.

Psychedelics? Obviously hallucination.

You get the point.

It is the ingraining of the mindset that nothing exists outside of our biological meat suit and brain activity: that we have merely evolved over eons into strange creatures that use technology to navigate life before eventually succumbing to the infinite darkness that is death.

Sure, there is much beauty to behold within the baseline human experience that falls well within the materialist framework, such as the birth of a child, falling in love, eating dim sum, and peering over Niagara Falls. However, none of this gives us any knowledge of the greater reality and what could be. The fear of death lingers in the back of the mind for most who embrace materialism. While this viewpoint is troubling in that it strips away any and all of the underlying magic of existence… our main point is that it promotes an incomplete and inaccurate model of reality. This carries its own inherent danger, as massive resources are deployed based on this incomplete world model.

99.99% of the time, 99.99% of people share a general, consensus baseline reality.

However, that 0.01% of abnormal experiences can have quite an impact on the individuals themselves. Unfortunately, because many are uncomfortable sharing these experiences for fear of ridicule or of being labeled as having “hallucinations” (largely due to vocal skeptics like Randi), experiencers generally shy away from sharing them publicly. This is a loss for society, as it suppresses the possibility of developing a comprehensive worldview and map of what is possible.

The endeavor of “proving PSI” is less about any specific ability and more about what it points to.

Unequivocal proof of PSI could lead people to embrace awe and wonder regarding the natural world. It could inspire people to dig further into their true purpose on earth and why we exist at this very moment in time. It could lead to massive breakthroughs in societal emotional well-being and alleviate some of the distress associated with death and even general hardship. It could give us deep insight into the mechanisms of rapid, spontaneous remission of severe disease, which has been reported throughout history, often with a mystical theme.

The impact depends entirely on the forces that drive the narrative. As we have seen recently with the UAP discourse… some factions are massaging the message that advanced civilizations are a threat to society, while others claim to know that these civilizations are undoubtedly benevolent. Thus far, neither has been proven correct or incorrect, and the speculative discourse continues.

While we titled this piece “The Greatest $1 Million Marketing Campaign in History”… we must also acknowledge the complete lack of transparency and ethics associated with this venture. There was no transparency behind Randi’s initiative to test for PSI. By transparency, we mean publicly laying out the specific equipment, environment, time frame, and end-points that would be required for a display of PSI to count as legitimate and win the prize. Randi was widely criticized for constantly “moving the goalposts” to avoid ever awarding the prize. To many, he seemed to use the $1M prize as a public relations stunt rather than as a legitimate quest for truth. Unfortunately, the group currently offering a $500k prize for proof of PSI (the Center for Inquiry) seems to operate similarly in terms of transparency.

Transparency matters because some subjects view PSI abilities much like athletic skills: training and developing the neural circuitry for a specific event is key to optimizing the outcome. Sure, you could advise someone to “get in shape” to perform well in a general athletic endeavor, but disclosing the specific sport they will compete in (ping pong vs. basketball) can have a profound effect on their performance. Academia is no different: students study for a specific subject and are then tested in an environment they have been made aware of well in advance. There is no reason PSI testing should not be afforded the same kind of transparency.

Ethically speaking, Randi was compromised by bias, as he denigrated scientists and their efforts in an attempt to sway public opinion. Everyone is entitled to their perspective and agenda… the problem arises only when the public is led to believe that the tester is neutral and seeking nothing but the truth, when this was obviously not the case.

So now what to do?

If $1M can have such an impact on society… it would seem that offering a $1M prize with full public transparency and a rigorous design would be the move. Obviously, it would need to be presented by a party perceived as neutral. A skeptic organization offering a prize for proof of PSI would be equivalent to the Institute of Noetic Sciences (IONS) offering it: both would be seen as carrying inherent bias due to their history in the PSI space (although IONS has yet to show a lack of ethics in this space).

Postulating further… instead of awarding the prize to one individual who can display PSI, award it to platforms that can teach scalable methods of PSI, ideally platforms that can showcase 10 or more subjects with the same ability. There is strength in teachable protocols and strength in numbers when it comes to providing evidence for an extraordinary claim.

The $1M prize could also be split: 50% to the organization showcasing the ability of its 10 subjects and 50% to research on that specific display of PSI at a reputable university. Pairing a demonstration with scientific work to unravel the mechanism behind it would seem optimal for unveiling the ability to the world. It would theoretically be the best of both worlds, providing not just prize money but scientific validation as well.

There is literally nothing stopping a philanthropist, or a group of philanthropists, with the financial means from influencing the world with a new $1M prize in the same manner that Randi did. The difference would be that this prize would be designed and offered under complete transparency, with skeptics involved in the process.

One of the challenges is getting skilled mentalists and magicians to agree to publicly be part of the design endeavor. Many are biased and for whatever reason shy away from open dialogue regarding such a venture.

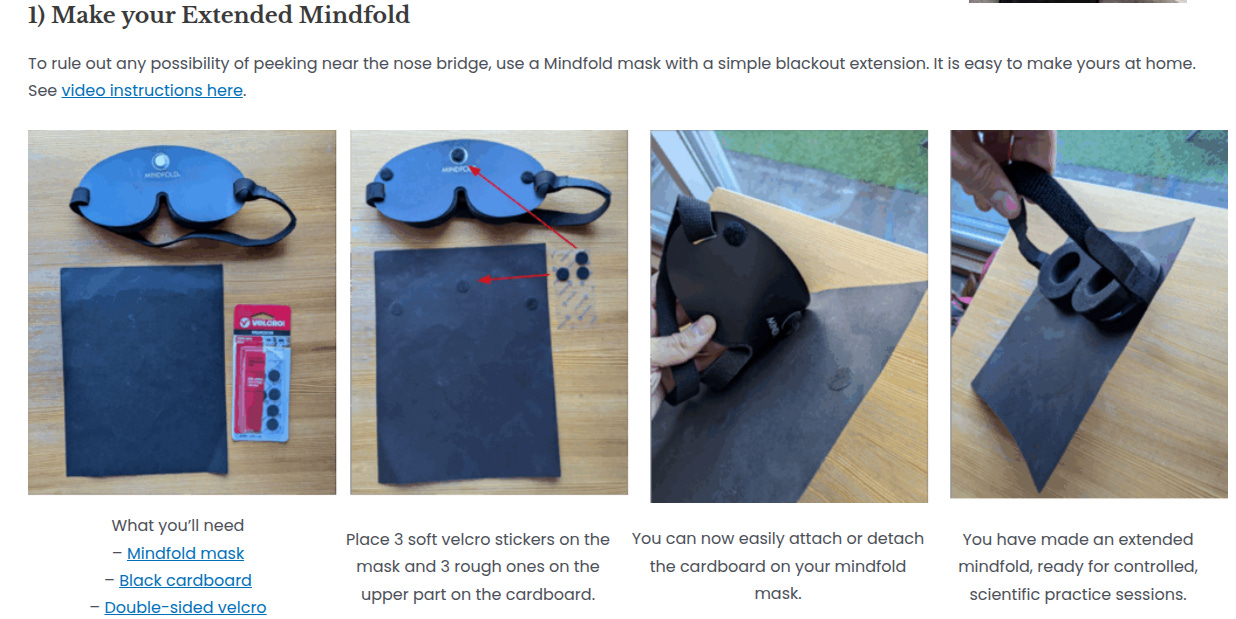

An interesting organization attempting to tackle a very specific area of PSI is the Center for the Unification of Science and Consciousness (CUSAC), founded by author and physicist Tom Campbell. The group is focused specifically on “mindsight,” the purported ability to perceive without one’s eyes. While this ability has been reported in related formats such as remote viewing and out-of-body experiences… CUSAC’s format for validating “mindsight” is currently being developed in a transparent manner. One criticism of subjects who claim to perform “mindsight” while wearing a sleep mask is the potential for cheating. This is a valid criticism, as many of the masks used to explore this ability clearly leave small gaps under and beside the nose. In addition, mentalists and magicians have shown they can subtly peek through these gaps even with multiple layers/barriers placed over their eyes.

The CUSAC group, alongside physicist/neuroscientist Dr. Àlex Gómez-Marín, is currently developing a protocol that uses a shield affixed on top of the mask to rule out the potential for peeking/cheating during any display of mindsight.

While this project is a work in progress, this is the type of transparency necessary to instill trust that other organizations have failed to generate. A quest for truth doesn’t need to take place in the shadows.

The biggest issue we are raising in this piece is simply the imbalance between the resources and effort invested and the impact they achieve.

For example, basic logic would suggest that remote viewing (RV) could be debunked with a handful of carefully designed trials and studies. Instead, there have been over 5,400 RV trials to date, conducted over numerous decades at various institutions. This indicates a signal robust enough that it cannot be ignored. Meta-analysis indicates that RV is a legitimate ability and that certain practitioners can develop this skill to a greater accuracy than others. However, the tens of millions of dollars and dozens of researchers devoted to formally studying this ability are completely discounted by the public because of a counter-faction that merely dangles its prize money ($500k/$1M) while claiming that RV is pure fallacy.

This is the ridiculous situation we are faced with.

It’s not as if the organization offering the prize money holds an annual event with a transparent application process for PSI practitioners across the world to compete in. There is nothing of the sort, so it’s entirely possible that the prize money is being used solely to discredit an entire field of inquiry.

A society molded more by devious factions with ulterior motives than by extensive scientific inquiry is, by definition, not modern. These aren’t the pre-2000 days, when information could be disseminated and controlled by a few media conglomerates with carefully crafted messaging. We are in an era where transparency is embraced and people clamor for unequivocal truth on a topic.

It’s absurd to think that an entity could literally spend $100 million or more on PSI-related research and publish reputable studies in high-impact journals, and yet still… an organization with a measly $500k can offset this entire effort by dangling a carrot with zero intent of actually feeding the donkey.

We live in an interesting moment in time, with opportunities limited only by one’s imagination. In 2021, billionaire businessman Robert Bigelow allocated nearly $2 million in prize money for an essay contest on evidence for the survival of consciousness after death. While it was an intriguing concept, its societal impact was tempered since it was essentially preaching to the choir of people who already know or believe in life after death.

In our opinion, a new Randi-style prize would have had a much greater impact on the perception of PSI and even of life after death.

P.S. Some have suggested that if PSI abilities were developed and promoted at a large scale, society itself could collapse due to the loss of privacy around financial security, passwords, etc. However, Edgar Cayce, one of the greatest psychics of the past hundred years, who generated over 14,000 written records of psychic readings focused predominantly on health, had a notoriously difficult, if not impossible, time using his ability to locate oil wells in the early 1900s. For whatever reason, there seem to be strange psychic distortions when it comes to using the ability for financial shortcuts.

Great article, and I 100% agree on the incentives for goalpost moving and for not running the Randi challenge (or the current one) well. However, I think it's also important to explore, stress test (the structure of the elusiveness may be testable), and publicize MPI (the Model of Pragmatic Information) as a possible account of how PSI works: strong and real in meaningful, closed, bounded systems and weak in the lab or in prize tests. If MPI is correct, then a neutral challenge, even one properly and rigorously run and requiring a signal strong enough to satisfy skeptics, may end up not being winnable, and may ironically bolster the "all PSI is not real" perspective unless the MPI perspective is well understood. (This applies to all attempts to conclusively and rigorously prove a PSI signal, including social-rv, telepathy tapes, university studies, etc.) Then again, a failed attempt might not leave the situation much worse off, so it may still be worthwhile. It might also be interesting to brainstorm whether some kind of MPI-friendly challenge is possible (though I don't know what that would look like). This may also have big implications for scientific studies on energy healing and even for the replication crisis in regular psychology and some medical experiments.

Some notes on this from chat:

Yeah, MPI would absolutely say: this kind of challenge is more likely to arm skeptics than to disarm them.

1. What MPI predicts will happen in a prize-style test

From MPI’s viewpoint:

Strict, repeatable, signal-like tests are exactly where psi-effects are expected to decline.

Lucadou shows that if the system is governed by non-local (entanglement-like) correlations, then in strict replications the effect size should fall roughly like 1/√n, and meta-analyses do in fact show this pattern. The authors of one big PK meta-analysis interpreted that decline as: “there is no real PK effect, it’s just artefact / selective reporting.”

Proof‑oriented experiments are called out as a dead end.

Lucadou explicitly concludes that, given this pattern, “it seems not useful to continue the research strategy of ‘proof‑oriented’ experiments, because a strict replication is the best recipe to destroy the effect.”

A high‑profile, high‑stakes $2M challenge is the archetype of a proof‑oriented experiment.

Heavy observation and documentation suppress autonomy and uniqueness.

MPI generalizes the “uncertainty relation”:

Effect‑size of a psi phenomenon × quality of documentation < constant.

And in the poltergeist/RSPK work, Lucadou says a system “can only behave as it pleases as long as one does not observe it with great care. A predetermined system loses its autonomy and because of that its ability to be unique as well.”

A prize test maximizes careful observation and pre‑determination.

So, from MPI’s own math and case analyses, the most likely outcome of a carefully monitored, endlessly replicable, high‑documentation psi challenge is:

Small or null effects in the official outcome variables,

with any entanglement‑like correlations either suppressed or displaced into places no one is looking.

2. How skeptics will naturally use that

Now combine that with the social side:

Skeptics already interpret the decline of effect size with more trials as evidence that “there is no real effect.”

MPI says: that decline is exactly what you expect if psi is non‑local and cannot be used to send a signal (NT‑axiom);

skeptics say: that decline shows it’s all noise and bias.

A big, neutral, well‑designed $2M challenge that yields:

No clear success → becomes the new flagship “proof” that psi doesn’t exist.

Ambiguous or marginal success → is easy to reframe as: “look, once they tightened controls, the big spooky effects evaporated,” which fits the same narrative.

And because MPI predicts that proof‑oriented, heavily controlled replications are the worst possible context to look for robust psi, you’ve essentially:

Set up an experiment in a regime where your own theory says the effect should be minimal,

and then handed the resulting nulls to critics as headline evidence.

So yes: from an MPI perspective, a “neutral,” high‑stakes challenge is not just unlikely to prove psi—it is structurally set up to generate exactly the kind of clean null result skeptics can brandish as ammunition.

If you wanted to avoid that trap, MPI would push you toward correlation‑matrix style studies, rich real‑world systems, and designs that acknowledge elusiveness up front, rather than putting all the rhetorical weight on a single, proof‑oriented showdown.

3. Are Cardeña’s results in tension with MPI?

They would be in tension if Cardeña had shown something like:

Large, stable effect sizes that don’t fall with more trials,

Easily repeatable on demand,

Working fine under maximally rigid, high‑documentation, high‑replication conditions,

Suggesting you really can treat psi as a reliable info channel.

That would contradict the MPI decline law and the non‑transmission (NT) axiom.

But what he actually shows is:

Effects are small;

Often strongest in free‑response / altered‑state / meaning‑rich settings and for selected, psi‑conducive participants;

Replication is imperfect and context‑dependent; psi cannot be replicated “on demand”;

Forced‑choice and large‑n micro‑PK paradigms show tiny effects that shrink with run length—exactly the kind of 1/√n decline MPI predicts.

From an MPI standpoint, that’s not counter‑proof; it’s empirical texture that fits the model:

There is something genuine enough to show up across many studies and paradigms.

Yet it stubbornly refuses to turn into a big, clean, industrial‑grade signal.

In fact, Lucadou’s whole move is: given this sort of meta‑analytic “yes, but small, and weirdly elusive” evidence, how do we model it in a way that (a) respects physics (no paradox machines) and (b) matches the observed decline / elusiveness patterns?

4. Paradox avoidance as a foundational motivation in MPI:

In one of Lucadou’s core expositions, MPI is introduced like this:

“The MPI starts from the basic assumption that nature does not allow (intervention) paradoxes.”

He then immediately turns this into the “two fundamental laws of parapsychology”:

Psi-phenomena are non-local correlations in psycho-physical systems, induced by the pragmatic information that creates the system (organizational closure).

Any attempt to use those non-local correlations as a signal (a classical causal effect) makes the correlation vanish or change.

Those two “laws” are basically:

You can have correlations, but you don’t get to use them to build paradox-inducing machines.

From there, MPI derives things like:

The decline law: effect size must fall roughly like const/√n as you add more trials, so you can’t drive Z-scores arbitrarily high and weaponize psi as a reliable channel.

The uncertainty-like relation between effect size and documentation quality (QDcrit · E < OC), so huge, spectacular effects can’t coexist with arbitrarily strong objectification.

All of that is directly motivated by: if you could lock in a stable, controllable, high-powered psi signal, you could build time-loop / intervention paradox setups; therefore you can’t.

So yes: paradox avoidance is right at the root of MPI’s structure, not an afterthought.
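The decline law described above has a simple numerical consequence, sketched below under stated assumptions. For a simple one-sample test, z is roughly the standardized effect size times √n, so if the effect size shrinks as c/√n, z stays pinned near c however many trials are added. The constant c = 2, the trial counts, and the function names are arbitrary choices for illustration, not figures or code from Lucadou's work.

```python
import math

# Hypothetical constant in the decline law ES(n) = C / sqrt(n); not a value from Lucadou.
C = 2.0


def decline_effect_size(n, c=C):
    """Per-trial standardized effect size under the decline law ES(n) = c / sqrt(n)."""
    return c / math.sqrt(n)


def z_score(effect_size, n):
    """Approximate z for a simple one-sample test: standardized effect size * sqrt(n)."""
    return effect_size * math.sqrt(n)


for n in (100, 10_000, 1_000_000):
    es = decline_effect_size(n)
    print(f"n={n:>9,}  effect size={es:.4f}  z={z_score(es, n):.2f}")

# For contrast, a constant effect size makes z grow like sqrt(n) without bound.
for n in (100, 10_000, 1_000_000):
    print(f"n={n:>9,}  constant effect size=0.2000  z={z_score(0.2, n):.2f}")
```

This is the sense in which, on MPI's account, adding trials cannot drive Z-scores arbitrarily high, whereas a genuinely constant effect would.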